This post aims to cram in a synopsis of Jeremy Howard’s talk at the inaugural Data Science Melbourne MeetUp at Inspire9 in Richmond on 12th May so may be a little disjointed in it’s flow. Jeremy freestyled his delivery once he had established from the members pretty quickly with a show of hands what it was he should be talking about.

There is a lack of intelligence from computers and data and what is at stake is not the proof of things we already know but knowing about the things we did not think of from data or what we should be questioning. This is where machine learning asks the computer to come up with some of the intelligence for you. Not as a replacement for human interpretation but as a compliment to it in the way of tools such as Google Prediction API.

Using machine learning to find the interesting insights and adding value is the huge appeal Jeremy finds in machine learning and to explain this, he kicked off with talking about Arthur Samuel who essentially came up with machine learning and invented what appeared to be the world’s first self-learning program. Samuel was pretty bad at chequers so decided to write a program in 1956 where the computer played against itself which outlined the different styles of play in the program and set out the parameters for play.

Whilst watching the machine play itself he placed an optimisation algorithm on top of the program and eventually ended up with a program that was able to beat him. By 1962 the computer had become so good that it had beat the US Champion. At the time Samuel worked at IBM and when it was announced to the market that they had come up with a program which could play chequers and was able to program itself IBM shares jumped 15%.

Today a lot of Google’s products that we use run on machine learning starting with it’s PageRank algorithm, a learning algorithm which changed the way we search. Translation tools use learning a little further down the line by scraping masses of websites which have already been translated by humans before using a machine learning algorithm which uses Statistical language translation. Other good examples of the use of machine learning is by insurance companies when deciding on quote prices and banks when making decisions on credit.

Jeremy says that he spent some time trying to convince a large Australian finance provider to use an algorithm to make credit decisions and demonstrated it’s effectiveness by back testing it against the manual lending decisions. This company was adamant that an algorithm would not pick up the small things in loan applications that human assessors could see yet later went bankrupt after failing to correctly predict their loan losses.

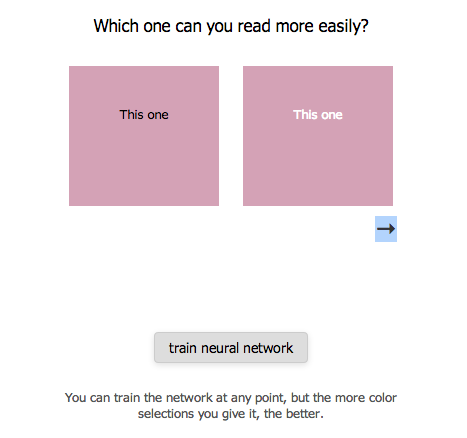

Training a Neural Network

This example of machine learning is a small programme used to train a neural network. Deciding on how to determine whether a pair of colours is a good contrast for human perception or not is pretty difficult and years of research have created libraries that can be used to handle for you how much contrast human visual perception will see between two colours. The guy that wrote this programme asked the user to input examples or which of the two contrasts you can read more easily and rather than using rules it trains a neural network which in Jeremy’s demonstration used his labels for information. The javascript program then shows examples of pairs of colours indicating what the neural network that he had trained thinks is the best colour against the official formula allowing the programmer to replace years of research and manual programming with a machine learning algorithm (neural network)

Neural networks are huge maps or computational models of layers of interconnected neurons which are very different to rule based programming and are inspired by the neural network in an animals central nervous system. A neural network relies on input to activate neurons before it is weighted (as determined by the programme) and passed on to the next layer of neurons until an output neuron is activated. The repeated exposure to input allows the network to be trained to recognise input.

When people talk about neural networks nowadays they generally mean deep learning neural networks with many layers. For example Google’s self driving car which uses a series of machine learning algorithms as it would be practically impossible for a human to write the rules on how a car should be driven. Howard also said that a prime example of machine learning was Watson winning Jeopardy and beating the two best players that have ever played the game. This worked by using something similar to a deep learning network which read the entirety of wikipedia. However the reading was not done with a set of rules but in ‘a far more fussy sense’ – in other words using algorithms to connect related material.

Jeremy also touched on the application of machine learning in cancer detection. The example given was from a medical journal where slides from cases of breast cancer were sent out to pathologists independently who mark the Mitosis which are the bits the pathologists looks for indicating cell duplication. The marked up images which were returned became the data source for a machine learning competition and it turned out that the winning algorithm was more in agreement with the pattern of the pathologists than any other individual pathologist. The point being that there are some things that people are better at than machines but this was the first time that an algorithm had been developed that could look at something better than humans.

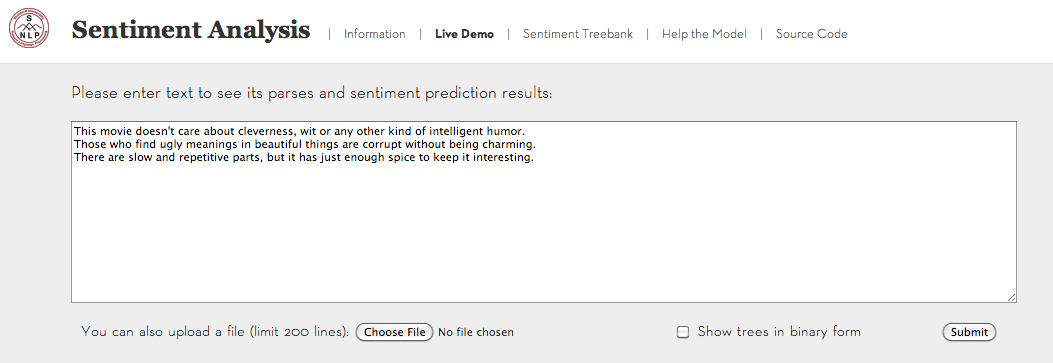

Another challenging area of machine learning is sentiment analysis and some work that comes out of Stanford was shown which tries to figure out whether a sentence is positive or negative in sentiment. Looking at the example below from the landing page shows that doing this kind of thing is more difficult than just counting up the amount of happy words against the amount of sad words, more about how the English language is structured. Jeremy explained that whilst we are still a long way from truly understanding how language works for sentiment analysis, this algorithm uses machine learning and parses sentences to try and work out what the sentences are about.

Whilst all of these are good examples of how machine learning is used for practical and hypothetical purposes, in 2012 the first Kaggle competition to be won using a Deep Learning algorithm determined which molecules in a set turned out to be toxic. The competition was mentioned in a New York Times article and was won by a Scientist called Geoffrey Hinton, who essentially invented Deep Learning. The important thing about winning this competition is that the team involved in winning this competition had no prior training in biology, life sciences or molecular research and in two weeks they had coded the world’s best medical algorithm with a small set of data.

The pattern is astonishing as Jeremy says it seems like every time someone points Deep Learning at something it turns out to be the worlds best way of doing something.

At about the same time Microsoft’s Chief Research Officer Rick Rashid demonstrated speech translation software at a conference in China via live machine translation. The deep neural networks which are used in the real time translation mean that the errors in spoken and written translation are significantly reduced and when the machine starts translating into spoken Chinese, the crowd breaks out into spontaneous applause. Jeremy joked that believe it or not standing ovations don’t usually happen at machine learning conferences but we were all free to prove him wrong!

[youtube=http://youtu.be/Nu-nlQqFCKg]

Most of the jobs today in the western world are in services which involve reading and writing, speaking and listening, looking at things and knowledge. Deep learning is now roughly as good as humans at a lots of these things and moving fast – in fact we are able to do things about 16,000 faster than just two to three years ago. Clearly there is going to be significant disruption with the evolution of Machine Learning (especially Deep Learning) is evolving exponentially and that will continue to happen, Jeremy says, and as we give problems more computing power and more data, there will be no known limits on how it can be used and the shocking thing over the next few years is how many jobs computers will be able to do better than us in the coming years.

Jeremy outlined the real world high level state of machine learning by saying that the kind of displacement we are likely to witness has happened before in human history with the Industrial Revolution. Prior to this productivity was very low and humans were inputting their own (or animals) energy into processes until the steam engine changed our world when there was a huge increase in productivity until we got to a point where everything that could be automated was automated before the graph more or less levels out after the revolution. This caused a lot of labour displacement and created poverty and social strife.

The difference with the machine learning revolution which Jeremy predicts is that he does not believe that there is any flattening out of where it can take humankind. Unlike the Industrial Revolution, he says, it is not a step change. We will get to the stage where machines are processing all the thoughts and intellectual work that humans can do but would not stop there, by definition machines would become better than human which makes the entire notion of machine learning both exciting and scary.

Jeremy’s presentation was an excellent start to the Data Science Melbourne group and left the bar at a very high setting for future speakers. At the very least it gave a very real if at times disturbing insight into a field of computer science which already has the momentum to profoundly change the world we live in. It’s not the stuff of science fiction either, it seems like we only need to do a little bit more work before the machines will be taking care of our evolution for us through Deep Learning.