Stanford University's Computer Systems Colloquium holds live talks throughout the academic year that are open to the public. Last Tuesday's guest speaker was Carey Nachenberg of Symantec and UCLA CS, who presented ‘Deep Learning for Dummies’ to an audience of mixed backgrounds and levels of experience.

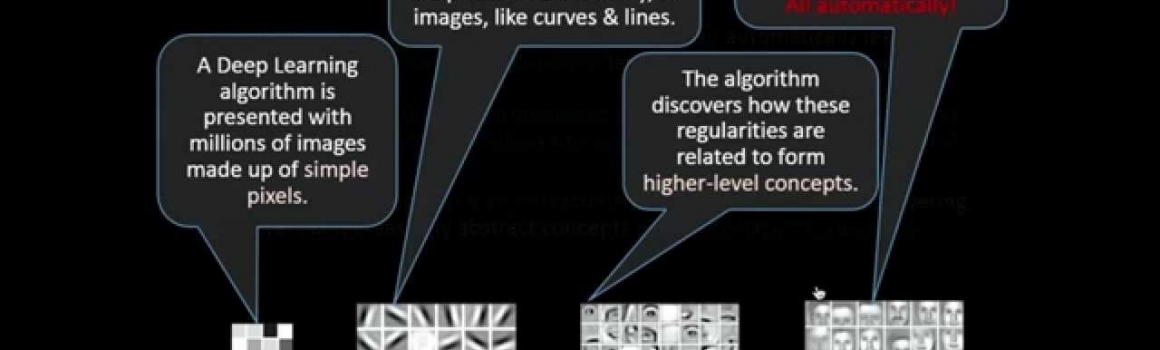

The aim of the talk was to give a high-level idea of what deep learning is, starting from machine learning and decision trees, and to show how deep learning figures out the relevant patterns in data automatically.

The roadmap of the talk starts with the Ising model, devised by Wilhelm Lenz in the early 1920s, which describes atoms arranged on a lattice, each pointing either up or down. Each pair of atoms is linked by a correlation (a weight): whether a pair tends to line up or to flip against each other depends on whether the energy coupling between them is correlated or anti-correlated, and closely coupled atoms exhibit similar behaviour. Nachenberg demonstrates building a histogram of the possible outcomes of atom flips (up or down) in a system of three atoms, and shows how the histogram lets you work out the probability of a given outcome appearing. He then moves on to 3D models and to the building blocks of deep learning and deep neural networks.
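The three-atom histogram can be sketched in Python. This is a minimal illustration under assumptions of our own, not the code from the talk: spins take the values -1 (down) and +1 (up), the energy is E(s) = -Σ w_ij s_i s_j, the coupling values in `WEIGHTS` and the temperature are hypothetical, and the histogram is built by Metropolis sampling (flipping one atom at a time). The sampled frequencies should approach the exact Boltzmann probabilities P(s) = exp(-E(s)/T) / Z, obtained by enumerating all 2^3 = 8 configurations.

```python
import itertools
import math
import random
from collections import Counter

# Hypothetical couplings between the three atoms: a positive weight favours the
# pair pointing the same way, a negative weight favours opposite orientations.
WEIGHTS = {(0, 1): 1.0, (1, 2): 1.0, (0, 2): -0.5}
TEMPERATURE = 1.0  # assumed; higher values flatten the distribution


def energy(spins):
    """Ising energy E(s) = -sum over pairs of w_ij * s_i * s_j, with s_i in {-1, +1}."""
    return -sum(w * spins[i] * spins[j] for (i, j), w in WEIGHTS.items())


def exact_probabilities():
    """Boltzmann probability of every configuration: P(s) = exp(-E(s)/T) / Z."""
    states = list(itertools.product((-1, 1), repeat=3))
    boltzmann = {s: math.exp(-energy(s) / TEMPERATURE) for s in states}
    z = sum(boltzmann.values())  # partition function
    return {s: b / z for s, b in boltzmann.items()}


def sampled_histogram(steps=100_000, seed=0):
    """Histogram of visited configurations under Metropolis sampling,
    normalised into estimated probabilities."""
    rng = random.Random(seed)
    spins = [1, 1, 1]
    counts = Counter()
    for _ in range(steps):
        i = rng.randrange(3)
        flipped = spins.copy()
        flipped[i] = -flipped[i]
        delta = energy(flipped) - energy(spins)
        # Always accept a flip that lowers the energy; otherwise accept with prob exp(-delta/T).
        if delta <= 0 or rng.random() < math.exp(-delta / TEMPERATURE):
            spins = flipped
        counts[tuple(spins)] += 1
    return {s: c / steps for s, c in counts.items()}


if __name__ == "__main__":
    exact = exact_probabilities()
    estimate = sampled_histogram()
    for state in sorted(exact, key=exact.get, reverse=True):
        print(state, f"exact={exact[state]:.3f}", f"sampled={estimate.get(state, 0.0):.3f}")
```

With these weights the two fully aligned configurations, (1, 1, 1) and (-1, -1, -1), share the highest probability, while the configurations that fight every coupling are rare.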

Nachenberg then turns to the work of Geoffrey Hinton and Terry Sejnowski, who together pioneered the application of learning algorithms to speech and vision, and describes their experiments in the 1980s with Boltzmann machines, learning networks built on the Ising model whose restricted variant, the Restricted Boltzmann Machine (RBM), later became a building block of deep networks. Hinton and Sejnowski's work centred on teaching these networks to encode a memory using gradient descent, and the talk also examines what happens to the correlations between atoms in the examples as gradient descent runs.
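The learning rule can be sketched on the same kind of three-atom system. Assuming a fully visible Boltzmann machine (no hidden units) with ±1 spins and no biases, the gradient of the log-likelihood with respect to a weight w_ij is the difference between how strongly atoms i and j are correlated in the training examples and how strongly they are correlated under the model, <s_i s_j>_data - <s_i s_j>_model; ascending the log-likelihood is the same as descending its negative. The memory patterns, learning rate and epoch count below are hypothetical, and the model averages are computed by exact enumeration rather than by sampling, which larger networks would require.

```python
import itertools
import math

N = 3  # number of atoms; small enough to enumerate every configuration exactly
STATES = list(itertools.product((-1, 1), repeat=N))
PAIRS = list(itertools.combinations(range(N), 2))


def model_distribution(weights):
    """Boltzmann distribution P(s) proportional to exp(sum_ij w_ij s_i s_j), with T = 1."""
    scores = [math.exp(sum(weights[p] * s[p[0]] * s[p[1]] for p in PAIRS)) for s in STATES]
    z = sum(scores)
    return [x / z for x in scores]


def correlations(states, probs):
    """Expected pairwise correlation <s_i s_j> under the given distribution."""
    return {p: sum(pr * s[p[0]] * s[p[1]] for s, pr in zip(states, probs)) for p in PAIRS}


def train(memories, epochs=200, learning_rate=0.1):
    """Gradient ascent on the log-likelihood of the memories:
    delta w_ij = learning_rate * (<s_i s_j>_data - <s_i s_j>_model)."""
    weights = {p: 0.0 for p in PAIRS}
    data_corr = correlations(memories, [1 / len(memories)] * len(memories))
    for _ in range(epochs):
        model_corr = correlations(STATES, model_distribution(weights))
        for p in PAIRS:
            weights[p] += learning_rate * (data_corr[p] - model_corr[p])
    return weights


if __name__ == "__main__":
    memories = [(1, 1, -1), (-1, -1, 1)]  # hypothetical patterns to store
    before = correlations(STATES, model_distribution({p: 0.0 for p in PAIRS}))
    weights = train(memories)
    after = correlations(STATES, model_distribution(weights))
    for p in PAIRS:
        print(p, f"w={weights[p]:+.2f}", f"model corr before={before[p]:+.2f} after={after[p]:+.2f}")
```

Running it shows the model's pairwise correlations moving from zero towards the ±1 correlations present in the stored memories, with each weight growing in the matching direction until the memories become the most probable configurations.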

The eureka moment of the talk, for deep learning as we know it, is seeing how gradient descent picks out the atoms that are in the ‘on’ state across multiple training images, and how these regularities are what let a network reconstruct its training data and so identify images.
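The idea that shared ‘on’ atoms are what the network picks up can be illustrated with a toy example. This is a sketch under assumptions of our own, not the network from the talk: it uses two hypothetical 3x3 binary images (a vertical and a horizontal bar that share only the centre pixel), a fully visible Boltzmann machine with 0/1 pixels, biases and pairwise weights (no hidden units, so not an RBM), exact gradient ascent on the log-likelihood over all 512 possible images, and a hypothetical `complete` helper that fills in a missing pixel with its most probable value given the others.

```python
import itertools

import numpy as np

# Hypothetical 3x3 training images (1 = pixel "on"), flattened row by row.
# They share the centre pixel (index 4) and otherwise differ.
VERTICAL = np.array([0, 1, 0,
                     0, 1, 0,
                     0, 1, 0], dtype=float)
HORIZONTAL = np.array([0, 0, 0,
                       1, 1, 1,
                       0, 0, 0], dtype=float)
IMAGES = np.stack([VERTICAL, HORIZONTAL])

N = IMAGES.shape[1]
# Every possible 3x3 binary image (2**9 = 512), so model averages can be computed exactly.
ALL_STATES = np.array(list(itertools.product((0.0, 1.0), repeat=N)))


def log_scores(states, bias, weights):
    """Unnormalised log-probability b.v + sum_{i<j} w_ij v_i v_j for each row of states."""
    return states @ bias + 0.5 * np.einsum("si,ij,sj->s", states, weights, states)


def model_moments(bias, weights):
    """Exact <v_i> and <v_i v_j> under the model, summing over all 512 images."""
    s = log_scores(ALL_STATES, bias, weights)
    p = np.exp(s - s.max())
    p /= p.sum()
    return p @ ALL_STATES, ALL_STATES.T @ (ALL_STATES * p[:, None])


def train(images, epochs=500, lr=0.5):
    """Gradient ascent on the log-likelihood: move each parameter by (data average - model average)."""
    bias = np.zeros(N)
    weights = np.zeros((N, N))
    data_mean = images.mean(axis=0)
    data_corr = images.T @ images / len(images)
    for _ in range(epochs):
        model_mean, model_corr = model_moments(bias, weights)
        bias += lr * (data_mean - model_mean)
        grad_w = data_corr - model_corr
        np.fill_diagonal(grad_w, 0.0)  # no self-connections
        weights += lr * grad_w
    return bias, weights


def complete(image, missing, bias, weights):
    """Hypothetical helper: fill the pixels listed in `missing` with their most probable
    values, keeping every other pixel fixed, by trying all 2**len(missing) options."""
    best, best_score = None, -np.inf
    for values in itertools.product((0.0, 1.0), repeat=len(missing)):
        candidate = image.copy()
        candidate[list(missing)] = values
        score = log_scores(candidate[None, :], bias, weights)[0]
        if score > best_score:
            best, best_score = candidate, score
    return best


if __name__ == "__main__":
    bias, weights = train(IMAGES)
    corrupted = VERTICAL.copy()
    corrupted[7] = 0.0  # knock out the bottom pixel of the vertical bar
    restored = complete(corrupted, missing=[7], bias=bias, weights=weights)
    print("w(1,7) =", round(weights[1, 7], 2), " w(1,3) =", round(weights[1, 3], 2))
    print(restored.reshape(3, 3))
```

Pixels that are on together in a training image (such as 1 and 7 in the vertical bar) end up with positive weights, pixels that never co-occur (such as 1 and 3) end up with negative weights, and those learned regularities are what let the model restore the knocked-out pixel.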