Deep Learning at Baidu is all the rage at the moment, at least until they are out-algorithmed by another research laboratory, perhaps one with more compute power. This is, of course, all part of what I was talking about last week, when I wrote about the Wall Street Journal’s report that Baidu had posted the world’s best results on the ImageNet dataset, letting its custom-built supercomputer, Minwa, stretch its legs.

Dr. Ren Wu, a Distinguished Scientist at Baidu, spoke at the GPU Conference ahead of the result about the hardware and software co-design that gives Minwa up to 0.6 petaflops of theoretical peak performance.

His work falls under the remit of Baidu’s Institute of Deep Learning (IDL), which complements other areas of artificial intelligence work at Baidu, such as its Singapore-based laboratory, Baidu Infocomm Research Centre (BIRC), which focuses on natural language processing for South East Asian languages.

Whilst the focus of Dr. Wu’s current work is image recognition, his background in heterogeneous computing is critical to the thinking behind bringing the power of deep learning to billions of people. Consumers will always demand new and improved user experiences, but the challenge is delivering them whilst reducing power consumption and data centre running costs. High performance computing comes first, but heterogeneous architectures are what allow that performance to be delivered to consumers at a reasonable cost.

Yann LeCun of Facebook AI Research has previously noted that ImageNet is starting to become ‘passé as a benchmark’, as other laboratories seem to be focusing on an algorithm’s ability to represent complex functions as more easily digestible coefficients. That investment may mean the approach to heterogeneous architectures need not be so complex.

Wu says the inspiration for Baidu’s philosophy of deep learning comes from legendary figures in Chinese history. The military general Sun Tzu, who wrote The Art of War (which is good train reading, by the way, and great preparation for a challenging day whatever your job is!), said ‘more calculations win, few calculations lose’, whilst the historian Ban Gu said ‘the more you see, the more you know.’ At Baidu, this translates as Big Data.

The third inspiration for Baidu’s work is the Chinese philosopher Mencius, who said that it is the ability to see fine detail that makes you smart. On a side note, his top quote on Goodreads was “Friendship is one mind in two bodies”, which I thought was pretty cute.

So, with the philosophy chosen, what about the hardware? Minwa uses 72 Intel Xeon E5-2620 processors and 144 Nvidia Tesla K40m GPUs, giving it around 0.6 petaflops (0.6 quadrillion floating-point operations per second) of theoretical peak performance. On the software optimisation, Wu says:

> We have also optimized the software to a great extent. In our case, 32 GPUs will give us 25x performance compared to a single GPU, and this is wall clock time. In other words, this is the time that the training can [converge] to certain accuracy levels, so this is the most rigorous measurement you can do for parallel software.
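As a rough sanity check on these figures, the sketch below multiplies out Minwa’s 144 GPUs to a theoretical peak and turns Wu’s “25x on 32 GPUs” into a parallel efficiency. The per-GPU peak of about 4.29 TFLOPS (single precision, base clock) is an assumption taken from NVIDIA’s published Tesla K40 specifications, not from the talk, and the CPUs’ contribution is ignored.

```python
# Assumption: a Tesla K40 peaks at roughly 4.29 TFLOPS single precision
# (base clock, per NVIDIA's spec sheet). CPU FLOPS are ignored here.
K40_PEAK_TFLOPS = 4.29
NUM_GPUS = 144  # GPU count quoted for Minwa


def aggregate_peak_pflops(num_gpus: int, per_gpu_tflops: float) -> float:
    """Theoretical peak of the whole machine, converted from TFLOPS to PFLOPS."""
    return num_gpus * per_gpu_tflops / 1000.0


def parallel_efficiency(speedup: float, workers: int) -> float:
    """Fraction of ideal linear speedup actually achieved (1.0 = perfect scaling)."""
    return speedup / workers


peak = aggregate_peak_pflops(NUM_GPUS, K40_PEAK_TFLOPS)  # about 0.62 PFLOPS
eff = parallel_efficiency(25.0, 32)                       # 25 / 32

print(f"theoretical GPU peak: {peak:.2f} PFLOPS")
print(f"parallel efficiency on 32 GPUs: {eff:.1%}")
```

Under that assumption the GPUs alone come to roughly 0.62 PFLOPS, consistent with the 0.6 petaflops quoted for Minwa, and a 25x speedup on 32 GPUs is about 78% of perfect linear scaling, a strong result for wall-clock time to a target accuracy.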

Because you can never have enough training examples, Baidu also augments its data by producing many variants of the same image, using colour and light filtering, distortion and cropping to mimic how the image would look to different observers and under different conditions, which neatly covers off Mencius’ teaching about fine detail.
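To make the idea concrete, here is a minimal NumPy sketch of on-the-fly augmentation that returns a different random variant of an image on every call. The transforms (random crop, horizontal flip, per-channel colour cast and a vignetting-style light falloff) and their parameter ranges are illustrative assumptions loosely mapped to the colour, lighting and cropping steps described above; this is not Baidu’s pipeline, and lens distortion is left out for brevity.

```python
import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator, crop: int = 224) -> np.ndarray:
    """Return one randomly transformed copy of an HxWx3 float image in [0, 1].

    Illustrative only: transforms and ranges are assumptions, not Baidu's settings.
    """
    h, w, _ = image.shape
    if h < crop or w < crop:
        raise ValueError("image is smaller than the crop size")

    # Random crop: choose a crop x crop window at a random position.
    top = rng.integers(0, h - crop + 1)
    left = rng.integers(0, w - crop + 1)
    out = image[top:top + crop, left:left + crop, :]

    # Random horizontal flip with probability 0.5.
    if rng.random() < 0.5:
        out = out[:, ::-1, :]

    # Colour cast: scale each RGB channel by its own random factor.
    out = out * rng.uniform(0.8, 1.2, size=3)

    # Vignetting-style falloff: darken pixels progressively toward the corners.
    centre = (crop - 1) / 2.0
    ys, xs = np.mgrid[0:crop, 0:crop]
    radius = np.hypot(ys - centre, xs - centre) / np.hypot(centre, centre)  # 0 at centre, 1 at corners
    strength = rng.uniform(0.0, 0.3)
    out = out * (1.0 - strength * radius**2)[:, :, None]

    # Keep pixel values in the valid range after the colour scaling.
    return np.clip(out, 0.0, 1.0)


# Usage: four different random variants of one synthetic 256x256 image.
rng = np.random.default_rng(0)
image = rng.random((256, 256, 3))
variants = [augment(image, rng) for _ in range(4)]
```

Generating variants on the fly like this means the enlarged dataset never has to be stored; each training pass simply sees fresh random transformations of the same source images.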

This process turns a sample of 1.5 million images into 90 billion training images, which Wu says makes the neural networks much less prone to overfitting.
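The scale of that expansion is easy to check: 90 billion divided by 1.5 million is 60,000 training variants per source image. The sketch below does the division and then shows how a handful of independent transform choices multiply into a number that large. The per-transform counts are hypothetical, picked only so that the product lands on 60,000, and do not describe Baidu’s actual settings.

```python
from math import prod

SOURCE_IMAGES = 1_500_000
AUGMENTED_IMAGES = 90_000_000_000

# Variants needed per source image to reach the quoted total.
variants_per_image = AUGMENTED_IMAGES // SOURCE_IMAGES

# Hypothetical discretisation of the transform space (illustrative numbers only).
choices = {
    "crop_position": 100,
    "horizontal_flip": 2,
    "colour_cast": 30,
    "vignetting_strength": 10,
}

# Independent choices multiply: 100 * 2 * 30 * 10 distinct combinations.
combinations = prod(choices.values())

print(f"variants per source image: {variants_per_image:,}")
print(f"combinations from the hypothetical grid: {combinations:,}")
```

Because the counts multiply rather than add, even a modest number of options per transform quickly yields tens of thousands of distinct versions of every image.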

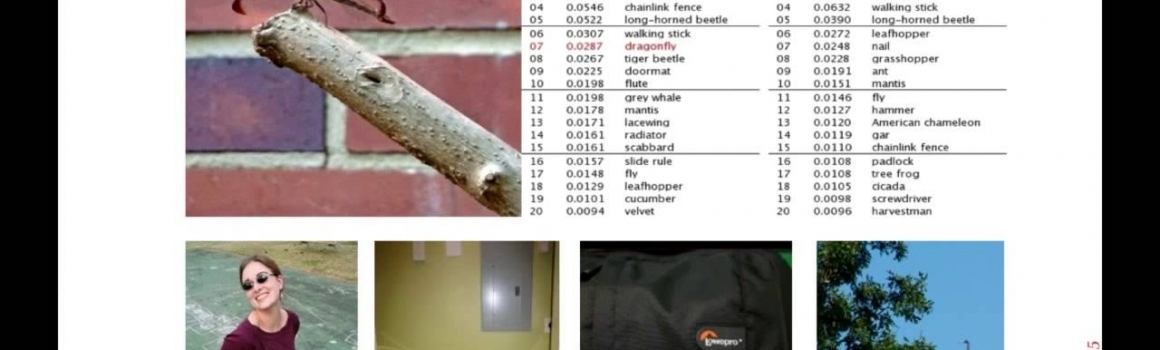

In some of the examples shown in Wu’s talk, it is easy to see how Baidu’s work is closing the gap between human-level labelling accuracy and perfect labelling. Look out for the bathtubs or the woman on the tricycle, the kinds of images that humans could easily mislabel.
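ImageNet classification results are conventionally reported as top-5 error: a prediction counts as correct if the true label is among the model’s five highest-scoring classes, and this is the yardstick used when comparing models with human labellers. A minimal NumPy sketch, using made-up toy scores rather than any real model output:

```python
import numpy as np


def top_k_error(scores: np.ndarray, labels: np.ndarray, k: int = 5) -> float:
    """Fraction of examples whose true label is NOT among the k highest scores.

    scores: (n_examples, n_classes) array of model scores.
    labels: (n_examples,) array of integer class indices.
    """
    # Indices of the k largest scores in each row (their order within the k is irrelevant).
    top_k = np.argpartition(scores, -k, axis=1)[:, -k:]
    # An example is a hit if its true label appears anywhere in its top-k set.
    hits = (top_k == labels[:, None]).any(axis=1)
    return 1.0 - hits.mean()


# Toy data: 3 images, 7 classes (values are invented for illustration).
scores = np.array([
    [0.9, 0.1, 0.0, 0.0, 0.0, 0.0, 0.0],  # true class 0 ranked 1st: hit
    [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6],  # true class 0 ranked last: miss
    [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.0],  # true class 2 ranked 4th: hit
])
labels = np.array([0, 0, 2])

print(top_k_error(scores, labels, k=5))  # 1 miss out of 3 examples
```

Using `np.argpartition` avoids fully sorting every row, which helps when each image is scored against ImageNet’s 1,000 classes.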

Follow me on Twitter for more prodding around the Deep Learning ecosystem.