The leaves are rustling at Project Oxford, the Microsoft venture that offers a portfolio of REST APIs and SDKs. These give developers the tools to add intelligent services such as facial or speech recognition to their applications without spending vast resources on building their own machine learning capabilities.

Microsoft has released the suite of services in beta, giving developers the chance to automate processes that would otherwise be beyond the bounds of practicality, using some of the same techniques that Microsoft has used for Azure Machine Learning.

Dr. Harry Shum demonstrated Project Oxford at Build 2015 this week, showcasing the kind of need it is able to address. In the example, a photo of two random players in a cricket team is submitted to a web service built on Project Oxford’s APIs, which uses facial recognition to return the players’ names. You can then search for further information on a player using the app’s speech recognition integration.

Programme Manager Ryan Galgon explains, “As we built these APIs, against Harry’s joke about ‘so easy, even a Microsoft Executive can use it’, things like detection and identification, very complicated models in the back-end, very hard to do but with just two lines of code, we have one line for detection, one line to identify it make it very easy to include these in your application.”

Project Oxford is split into three main services: Vision APIs, Face APIs and Speech APIs. A fourth service, LUIS (Language Understanding Intelligent Service), which is more about interpreting what users mean in natural language, is currently in private preview.

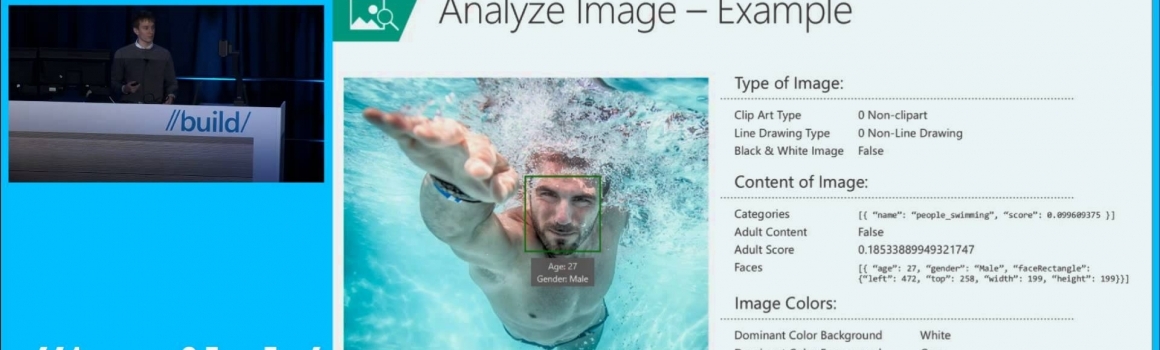

The Vision API includes the Analyse Image service, which returns a set of features describing an image, such as its type, content and colours. It also offers Optical Character Recognition (OCR) to identify text and its language within images, and Smart Thumbnail, which crops an image so that the relevant content stays in the frame even when it is off-centre.
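
To make the Smart Thumbnail idea concrete, here is a minimal sketch of a crop that follows an off-centre subject. It is not Microsoft's algorithm: it assumes the region of interest is already known, and the function name `crop_window` and its parameters are hypothetical, chosen only for illustration.

```python
def crop_window(image_size, roi, crop_size):
    """Return (left, top, right, bottom) of a crop centred on the region of interest.

    image_size and crop_size are (width, height) in pixels.
    roi is (left, top, right, bottom) of the content to keep in frame.
    This is an illustrative sketch, not the Project Oxford implementation.
    """
    img_w, img_h = image_size
    crop_w, crop_h = crop_size
    if crop_w > img_w or crop_h > img_h:
        raise ValueError("crop must fit inside the image")

    roi_left, roi_top, roi_right, roi_bottom = roi
    # Centre of the region of interest.
    cx = (roi_left + roi_right) // 2
    cy = (roi_top + roi_bottom) // 2

    # Centre the crop on the subject, then clamp it to the image bounds.
    left = min(max(cx - crop_w // 2, 0), img_w - crop_w)
    top = min(max(cy - crop_h // 2, 0), img_h - crop_h)
    return left, top, left + crop_w, top + crop_h


# A subject near the right edge of an 800x600 image.
window = crop_window((800, 600), (700, 100, 780, 200), (200, 200))
```

A plain centre crop of the same image would cover columns 300 to 500 and miss the subject entirely, whereas the clamped window above slides right until it reaches the image edge.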

The Face API’s detection feature finds faces in an image and can output attributes such as age and gender alongside facial landmarks, whilst the verification feature gives a confidence score for whether the faces in two images belong to the same person.
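
As a rough sketch of what a detection call over REST might look like, the snippet below builds (but does not send) an HTTP request using Python's standard library. The endpoint URL, the query parameter names and the `Subscription-Key` header are all placeholders assumed for illustration; the real names should be taken from the Project Oxford documentation.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

# Placeholder endpoint: an assumption for illustration, not the real service URL.
DETECT_URL = "https://example.invalid/face/detections"


def build_detect_request(image_url, api_key):
    """Build a POST request asking for age, gender and landmarks for one image."""
    # Hypothetical query flags for the attributes mentioned in the article.
    query = urlencode({
        "analyzesAge": "true",
        "analyzesGender": "true",
        "analyzesFaceLandmarks": "true",
    })
    body = json.dumps({"url": image_url}).encode("utf-8")
    return Request(
        f"{DETECT_URL}?{query}",
        data=body,
        headers={
            "Content-Type": "application/json",
            "Subscription-Key": api_key,  # hypothetical header name
        },
        method="POST",
    )


request = build_detect_request("https://example.invalid/team.jpg", "YOUR_KEY")
```

Passing `request` to `urllib.request.urlopen` would send it; that step is left out here because the endpoint above is not real.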

Bing powers the Speech APIs, which include Voice Recognition (speech to text), with support for seven languages, and Voice Output (text to speech). Voice Recognition has two modes, short form and long form, which deal with audio in 15-second and 2-minute chunks respectively. It can be accessed either through a set of REST APIs or through client libraries that use a WebSocket connection to stream results back. Voice Output currently supports seventeen languages.
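
The two Voice Recognition modes suggest that longer recordings need to be split before they are submitted. The sketch below plans those splits, assuming that 15 seconds and 2 minutes are the maximum length of a single chunk in each mode; the function name `plan_chunks` is hypothetical and nothing here calls the actual service.

```python
SHORT_FORM_SECONDS = 15    # short form limit, from the figures quoted above
LONG_FORM_SECONDS = 120    # long form limit (2 minutes)


def plan_chunks(duration_seconds, mode="short"):
    """Return (start, end) offsets in seconds that cover a recording.

    Each span is no longer than the limit for the chosen mode.
    """
    limits = {"short": SHORT_FORM_SECONDS, "long": LONG_FORM_SECONDS}
    if mode not in limits:
        raise ValueError("mode must be 'short' or 'long'")
    limit = limits[mode]

    chunks = []
    start = 0
    while start < duration_seconds:
        end = min(start + limit, duration_seconds)
        chunks.append((start, end))
        start = end
    return chunks


short_plan = plan_chunks(40, mode="short")
long_plan = plan_chunks(40, mode="long")
```

For a 40-second clip, short form needs three chunks while long form fits the whole clip in one, which is the practical trade-off between the two modes.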