Daeil Kim is a Data Scientist at the New York Times, a role that has focused his research on the niche between Machine Learning and Journalism. At the New York Data Science Meetup last week, Kim spoke about three things: using Machine Learning to make journalists' work easier, a Bayesian perspective on big data, and, in a discrete section, non-journalistic uses of Machine Learning at the NYT.

Kim describes an algorithm he developed to support the journalist Hiroko Tabuchi (go to 3:15). She was reporting on faulty Takata airbags, and the algorithm helped establish which accidents may have been caused by, or involved, faulty airbags from the manufacturer. Tabuchi used data from the National Highway Traffic Safety Administration (NHTSA) database, which contained over 30,000 accident comments. She sifted through them and labelled 2,219 as suspicious, that is, as possibly involving the airbags.

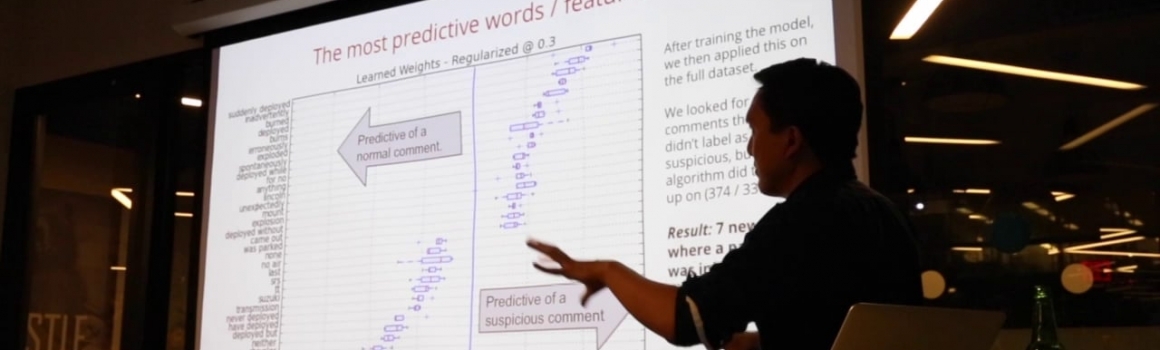

The text of the comments was tokenised into word combinations, which were then filtered to create the feature space for a prediction problem. More than 56,000 features were identified, and their frequencies in suspicious and non-suspicious comments were compared to prepare the data for training. The algorithm was 97% accurate, although that figure is measured only against Tabuchi's own labelling. The algorithm was also able to identify suspicious comments beyond those she had labelled initially.
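The talk does not say which classifier was used, so the following is only a minimal sketch of the general pipeline, assuming word n-gram features and a multinomial naive Bayes model (a swapped-in technique, not necessarily Kim's). It tokenises comments into 1- and 2-word combinations, filters rare features with a hypothetical `min_count` threshold, and compares feature frequencies per class to score new comments. The training comments are made-up toy examples, not NHTSA data.

```python
import math
import re
from collections import Counter


def ngrams(text, n_max=2):
    """Tokenise into lowercase words and return all 1..n_max word combinations."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    feats = []
    for n in range(1, n_max + 1):
        feats += [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]
    return feats


class NaiveBayes:
    """Multinomial naive Bayes over n-gram counts (label 1 = suspicious)."""

    def fit(self, texts, labels, min_count=1):
        self.counts = {0: Counter(), 1: Counter()}  # feature frequencies per class
        self.docs = Counter(labels)                 # number of comments per class
        for text, y in zip(texts, labels):
            self.counts[y].update(ngrams(text))
        # Filter rare features to shrink the feature space (hypothetical threshold).
        total = self.counts[0] + self.counts[1]
        self.vocab = {f for f, c in total.items() if c >= min_count}
        self.totals = {y: sum(c[f] for f in self.vocab) for y, c in self.counts.items()}
        return self

    def log_score(self, text, y):
        """Log prior plus Laplace-smoothed log likelihood of the known features."""
        score = math.log(self.docs[y] / sum(self.docs.values()))
        v = len(self.vocab)
        for f in ngrams(text):
            if f in self.vocab:
                score += math.log((self.counts[y][f] + 1) / (self.totals[y] + v))
        return score

    def predict(self, text):
        return max((0, 1), key=lambda y: self.log_score(text, y))


# Toy, invented comments purely for illustration.
texts = [
    "airbag deployed and metal shrapnel cut driver",
    "airbag exploded sending shrapnel into cabin",
    "rear ended at low speed no injuries",
    "brakes failed on wet road",
]
labels = [1, 1, 0, 0]
model = NaiveBayes().fit(texts, labels)
```

With this toy model, `model.predict("the airbag exploded and shrapnel hit my face")` returns `1`. Naive Bayes is a common baseline for very wide, sparse text features like the 56,000 described above, but the real system may well have used a different model.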

Kim goes on to talk about topic modelling with latent Dirichlet allocation (LDA) (go to 12:13). This means extracting keywords for specific topics from separate documents to build probability distributions, which is extremely useful given the large number of documents held by the NYT. LDA (go to 16:18) is a generative model that explains observed data through unobserved (latent) groups, which accounts for why some parts of the data are similar. Kim then discusses Bayes' theorem and the probability of a model given observed data.
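To make the LDA idea concrete, here is a minimal sketch of collapsed Gibbs sampling for LDA. This is one standard way to fit the model, not necessarily the one used at the NYT. Each sampling step applies Bayes' rule: the posterior probability that a word belongs to topic k is proportional to how much its document uses k, times how strongly k favours that word. The hyperparameters `alpha` and `beta`, the iteration count, and the toy corpus are illustrative assumptions.

```python
import random


def lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iterations=200, seed=0):
    """Fit LDA with collapsed Gibbs sampling.

    docs: list of documents, each a list of word tokens.
    Returns (vocab, phi, theta) where phi[k][v] = p(word v | topic k)
    and theta[d][k] = p(topic k | document d).
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    index = {w: i for i, w in enumerate(vocab)}
    V, K = len(vocab), num_topics
    n_dk = [[0] * K for _ in docs]      # tokens in document d assigned to topic k
    n_kw = [[0] * V for _ in range(K)]  # times word v is assigned to topic k
    n_k = [0] * K                       # tokens assigned to topic k overall
    z = []                              # current topic of every token

    # Start from a random topic for every token.
    for d, doc in enumerate(docs):
        z.append([])
        for w in doc:
            k = rng.randrange(K)
            z[d].append(k)
            n_dk[d][k] += 1
            n_kw[k][index[w]] += 1
            n_k[k] += 1

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v, k = index[w], z[d][i]
                # Remove this token's current assignment from the counts.
                n_dk[d][k] -= 1
                n_kw[k][v] -= 1
                n_k[k] -= 1
                # Bayes' rule: p(topic | rest) is proportional to
                # (document's use of the topic) x (topic's use of the word).
                weights = [(n_dk[d][t] + alpha) * (n_kw[t][v] + beta) / (n_k[t] + V * beta)
                           for t in range(K)]
                k = rng.choices(range(K), weights=weights)[0]
                z[d][i] = k
                n_dk[d][k] += 1
                n_kw[k][v] += 1
                n_k[k] += 1

    phi = [[(n_kw[k][v] + beta) / (n_k[k] + V * beta) for v in range(V)] for k in range(K)]
    theta = [[(n_dk[d][k] + alpha) / (len(doc) + K * alpha) for k in range(K)]
             for d, doc in enumerate(docs)]
    return vocab, phi, theta


def top_words(vocab, phi, n=3):
    """Keywords for each topic: the n most probable words under phi[k]."""
    return [[vocab[v] for v in sorted(range(len(vocab)), key=lambda v: -row[v])[:n]]
            for row in phi]


# Toy corpus, invented for illustration.
docs = [
    ["airbag", "recall", "airbag", "inflator"],
    ["election", "vote", "ballot", "vote"],
    ["airbag", "inflator", "recall"],
    ["ballot", "election", "vote"],
]
vocab, phi, theta = lda_gibbs(docs, num_topics=2, iterations=50)
```

`top_words(vocab, phi)` gives the keyword lists per topic, and each row of `theta` is a document's probability distribution over topics. These are the two kinds of distribution the talk describes.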

Putting all this into practice, Kim and Ben Swanson from MIT Media (go to 23:34) created Refinery, an open-source web application focused on data visualisation. It lets journalists upload zip files and extract data and phrases on specific topics.
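Refinery's internals are not covered here, so the following is only a hypothetical sketch of the ingestion step such a tool needs. It reads plain-text documents out of an uploaded zip archive with Python's standard `zipfile` module, then counts two-word phrases as a crude form of phrase extraction. The function names, the `.txt`-only filter and the tokenising regex are all assumptions for illustration.

```python
import io
import re
import zipfile
from collections import Counter


def load_corpus_from_zip(zip_bytes):
    """Read every .txt member of an uploaded zip archive into {name: tokens}."""
    corpus = {}
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for name in archive.namelist():
            if not name.lower().endswith(".txt"):
                continue  # skip directories and non-text files (assumed policy)
            text = archive.read(name).decode("utf-8", errors="replace")
            corpus[name] = re.findall(r"[a-z']+", text.lower())
    return corpus


def top_phrases(corpus, n=5):
    """Most frequent two-word phrases across the whole corpus."""
    counts = Counter()
    for tokens in corpus.values():
        counts.update(zip(tokens, tokens[1:]))
    return [" ".join(pair) for pair, _ in counts.most_common(n)]
```

The resulting token lists could then be fed to a topic model such as LDA, with phrase counts shown alongside each topic.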

The final and more sensitive part of the talk before the questions (go to 29:47) looks at challenges at the NYT that machine learning could help with, such as building a more loyal readership and an international following. Kim also talks about collaborative filtering, which harnesses the existing readership by recommending the most relevant articles based on readers' past habits. All of this ultimately helps the bottom line. It also supports the notion that machine learning can become central to the business model, and so to the future success of news organisations.
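The talk does not detail the NYT's recommender, so here is a minimal sketch of one common form of collaborative filtering: item-based filtering with cosine similarity on binary "has read" data. Articles read by the same people are treated as similar. A reader is then recommended unread articles that are most similar to what they have already read. The reading histories are invented toy data, and the reader and article IDs are hypothetical.

```python
import math
from collections import defaultdict


def item_similarities(history):
    """Cosine similarity between articles, using binary reader vectors.

    history: {reader_id: set of article_ids the reader has read}.
    """
    readers_of = defaultdict(set)
    for reader, articles in history.items():
        for article in articles:
            readers_of[article].add(reader)
    sims = {}
    items = sorted(readers_of)
    for i, a in enumerate(items):
        for b in items[i + 1:]:
            overlap = len(readers_of[a] & readers_of[b])
            if overlap:
                s = overlap / math.sqrt(len(readers_of[a]) * len(readers_of[b]))
                sims[(a, b)] = sims[(b, a)] = s
    return sims


def recommend(reader, history, sims, n=3):
    """Score unread articles by their summed similarity to articles already read."""
    seen = history[reader]
    scores = defaultdict(float)
    for (a, b), s in sims.items():
        if a in seen and b not in seen:
            scores[b] += s
    # Highest score first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda art: (-scores[art], art))[:n]


# Toy reading histories, invented for illustration.
history = {
    "ann": {"a1", "a2"},
    "bob": {"a1", "a2", "a3"},
    "cat": {"a2", "a4"},
    "dan": {"a3"},
}
sims = item_similarities(history)
```

Here `recommend("ann", history, sims)` returns `["a3", "a4"]`. Article `a3` shares a reader with both of Ann's articles, so it outranks `a4`, which is linked only through `a2`. Production systems typically use weighted engagement signals and matrix factorisation rather than raw co-reading counts.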